Did I Miss The Memo?

In the current issue of The Atlantic, Brian Christian writes that

As computing technology in the 21st century moves increasingly toward mobile devices, we’ve seen the 1990s’ explosive growth in processor speed taper off.

That is probably wrong.

- In 1990, a Mac IIfx had a 40MHz 68030.

- In 2000, a PowerMac G4 had a 500MHz G4.

- In 2010, a Mac Pro has eight Nehalem cores, each running at about 3GHz, plus a graphics processor that’s much more powerful on its own than that G4.

We’re getting close, but Moore’s Law hasn’t been repealed yet. Perhaps we care more about mobile computing now, perhaps we don’t worry as much about processor speed. But that processor speed is there, and it’s still growing. All those nifty little animations that make the iPad seem so simple and pretty? That’s all about processing speed.
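As a rough check on the three machines listed above, here is a back-of-the-envelope sketch in Python. It assumes that cores multiplied by clock speed is a fair stand-in for "processor speed", which is a crude simplification: it ignores how much work each chip does per cycle. The names `machines`, `aggregate_mhz`, and `growth_by_decade` are made up for this illustration.

```python
# Figures quoted in the list above: (year, cores, clock in MHz per core).
machines = [
    (1990, 1, 40),     # Mac IIfx, 68030
    (2000, 1, 500),    # PowerMac G4
    (2010, 8, 3000),   # Mac Pro, eight Nehalem cores at about 3GHz
]

def aggregate_mhz(cores, clock_mhz):
    # Assumption: cores x clock as a crude stand-in for total processor speed.
    return cores * clock_mhz

def growth_by_decade(rows):
    # Return {(start_year, end_year): growth factor} for consecutive rows.
    result = {}
    for (y0, c0, f0), (y1, c1, f1) in zip(rows, rows[1:]):
        result[(y0, y1)] = aggregate_mhz(c1, f1) / aggregate_mhz(c0, f0)
    return result

for (start, end), factor in growth_by_decade(machines).items():
    print(f"{start} to {end}: about {factor:g}x")
```

By this measure the 1990s come out at about 12.5x and the 2000s at about 48x. Clock speed per core grew only sixfold in the second decade, so most of that gain comes from the extra cores, and the sketch leaves out the graphics processor entirely.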

Where were the fact checkers, anyway?

Christian’s essay, which is mostly about the Loebner Prize (for chatbots that can come close to passing the Turing test), seems oddly concerned with gimmicks that programs can use to briefly mimic sapience. Yes, an argumentative chatbot (or a dilatory, absent-minded one) might keep an observer in doubt for a minute or two. That’s nice – and it might win you a prize – but that’s not the point.

If you can sit down with a computer and talk about stuff – whatever you like – for a reasonable time, and you can’t really be sure it’s not a person, then how can you know it’s not thinking? That’s Turing’s point, after all. We all agree that kids think; they might not be ideal dinner partners, but they aren’t rocks or forklifts. We all agree that, if rocks and forklifts think, they provide us no evidence of their inner life. That’s what the Turing test is about: when the forklift can give you the same evidence of thinking that leads you to believe that a kid or a taciturn stranger is sentient, then you’d best behave as if the forklift is sentient, too.

Taking a cue from Christian’s dismissive closing argument, if you can sit down and talk about stuff with a machine, and if you happen to like what it says, why can't you think of it as a friend? If what it says strikes you as inspiring and illuminating, why not consider it an artist? If you learn from it, why not regard it as a teacher? Christian seems to think that’s inherently absurd – and, for the simple-sounding chatbots he seems to have encountered, perhaps it is.

If you think it cannot possibly happen, it seems to me you’re a vitalist, assuming as a given that only some mystical soul can allow thought.

Oddly, though the article says it concerns artificial intelligence, none of the programs involved are described in any detail. We don’t know how they work or what they know. Most seem to be trying to exploit loopholes in the field rules, such as a 5-minute limit, that could entitle them to a prize even though they wouldn’t really be passing the Turing test.

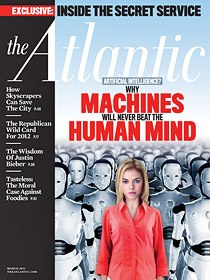

The cover headline,

Why MACHINES will never beat the HUMAN MIND

appears to have nothing at all to do with the article.