Visualization

Via J Nathan Matias, a pair of impressive Javascript visualization libraries: the Javascript InfoVis Toolkit and ProtoVis.

I’ve been working on understanding a core hypertext problem that few people discuss: thy is the Web still large? We can imagine a world in which almost all the traffic on the Web goes to a few very large sites, and everybody else gives up and goes home. That’s the world a number of experts expected ours would turn out to be – at least, not yet. What sorts of browsing behavior and what kinds of linking and search engines prevent all the attention from concentrating at a handful of URLs?

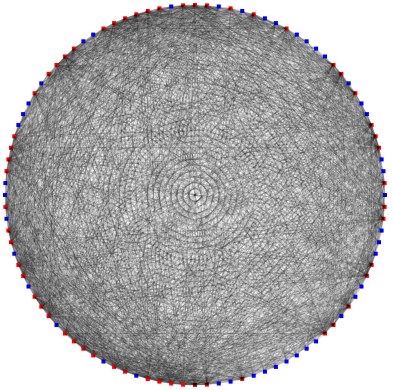

Here’s one of my simulations. We have 100 weblogs, represented as colored dots. Each starts with links (represented here as a thin line – to three neighbors. Every day, each author browses the “web”, following some links from their own weblog and discovering new weblogs. From time to time, they add a newly-discovered link to their own weblog. Occasionally, they drop an old link. (The model still has a lot of moving parts.)

After a couple of simulated years, we still have a fairly vibrant little corner of the blogosphere. But in this example, almost 40% of the sites – the blue dots – receive no links at all. Since our little world has no search engines, no email and no advertising, there’s no way for those forgotten sites to be rediscovered.

Different parameters lead to different outcomes. At one extreme, only a few sites wind up with traffic. At the other extreme, everything ends up linked to everything else and nobody gets very much attention. But there’s a surprisingly broad parameter space where some sites get lots of traffic, some sites get less, but we maintain a nice distribution – and low-traffic sites can sometimes get hot and displace the sites that happen to be above them in the pecking order.

That’s the Web we want; I’d like to know more about the conditions that allow it.