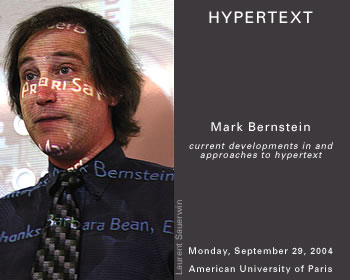

The theme of my talk at American University this afternoon was a simple puzzle.

When we make exciting new software, we aren't just trying to make things slightly better. We're trying to change everything, to make things a lot better. These are big aspirations, but we've sometimes succeeded.

- Get on a plane, and everyone around you is using a souped-up version of Alan Kay's Dynabook. I remember when laptops were someone's doctoral dissertation.

- Go to a store, pick something up. Chances are good, it's got a URL on it. The salad has a URL. They're scanning Harvard's library. The Web is, literally, everywhere.

- Everyone on the Metro has an iPod. More iPods than cell phones. That's a ton of music, and (I expect) a ton of Web-delivered art.

- That's not to mention things like the number of people whose lives were changed after reading a novel that wouldn't have been written if the author had to wrestle with typescripts, or the number of business blunders prevented by ubiquitous spreadsheets.

So, this is what we're trying to do. But we also want to know that we're making things better; we want to know when we've got it right.

And our techniques for measuring software quality, while good for measuring incremental improvements, are essentially blind to major successes. Terrific outcomes are bound to be rare: it's just too much to expect to make a terrific difference for nearly everybody. Statistical methods -- drag races, usability studies -- will never see more than one. And, if you see one terrific outcome in a sample of, say, 25 tests, you're almost certain to reject it as an outlier or a special case or a failure.

And if you don't, the reviewers will.
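To make the arithmetic concrete, here is a minimal sketch in Python. The data are invented for illustration: 25 hypothetical test subjects, 24 of whom see a small improvement and one of whom sees an enormous one. The helper name `tukey_outliers` and the choice of Tukey's fences as the outlier rule are my assumptions, not anything from a particular study.

```python
import statistics


def tukey_outliers(values, k=1.5):
    """Return the values lying outside Tukey's fences (quartiles -/+ k * IQR)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical data: percent improvement for each of 25 test subjects.
# Twenty-four see a modest gain; one sees a terrific gain.
improvements = [3, 4, 5, 6, 7] * 4 + [4, 5, 5, 6] + [400]

# The routine outlier rule flags the one terrific outcome.
flagged = tukey_outliers(improvements)

# Once it is dropped, the summary statistics describe only the modest gains.
trimmed = [v for v in improvements if v not in flagged]

print("flagged as outliers:", flagged)
print("median, all subjects:", statistics.median(improvements))
print("mean after trimming:", statistics.mean(trimmed))
```

The median never notices the terrific outcome, and a routine cleanup step deletes it from the mean. Nothing in the procedure is wrong; it simply isn't built to see the thing we most hoped to find.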

Perhaps the model here should be medicine -- another discipline that, like computer science, is essentially a craft (and sometimes an art) with aspirations to scientific seriousness -- to know and to demonstrate that the solution is good rather than simply to believe it so. The medical literature has long had a place for rare diseases and unexpected outcomes.

Perhaps I'm reading the wrong literature. But it seems to me that we're a lot better at finding out whether this widget is 5% faster than that widget than we are at learning about programs that can sometimes change everything.

Paris is rich in small, specialized shops. They often cluster together, as they did in the Middle Ages. Rue Dante, today, is a center for comics and role playing games. You constantly bump into specialized bookstores. And, of course, the bread store, the pastry store, the vegetable store, the butcher -- all separate.
