Today, my computer is your computer. We all have, pretty much, the same computer. Yours might be a year or two newer. Mine might be green.

photo: Heidi Kristensen

Today, your software is my software. Some details might change: maybe you use Mellel and I use Word, or you use Excel and I use Numbers. Small differences matter. But it's all pretty much the same. People expect that they won't need to read a manual, that everything is just like it's always been and that nothing ever changes much.

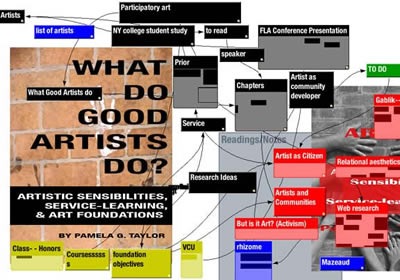

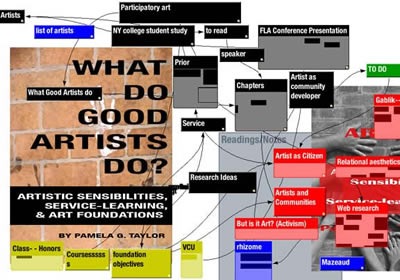

Tinderbox screenshot. Pamela Taylor, Virginia Commonwealth University

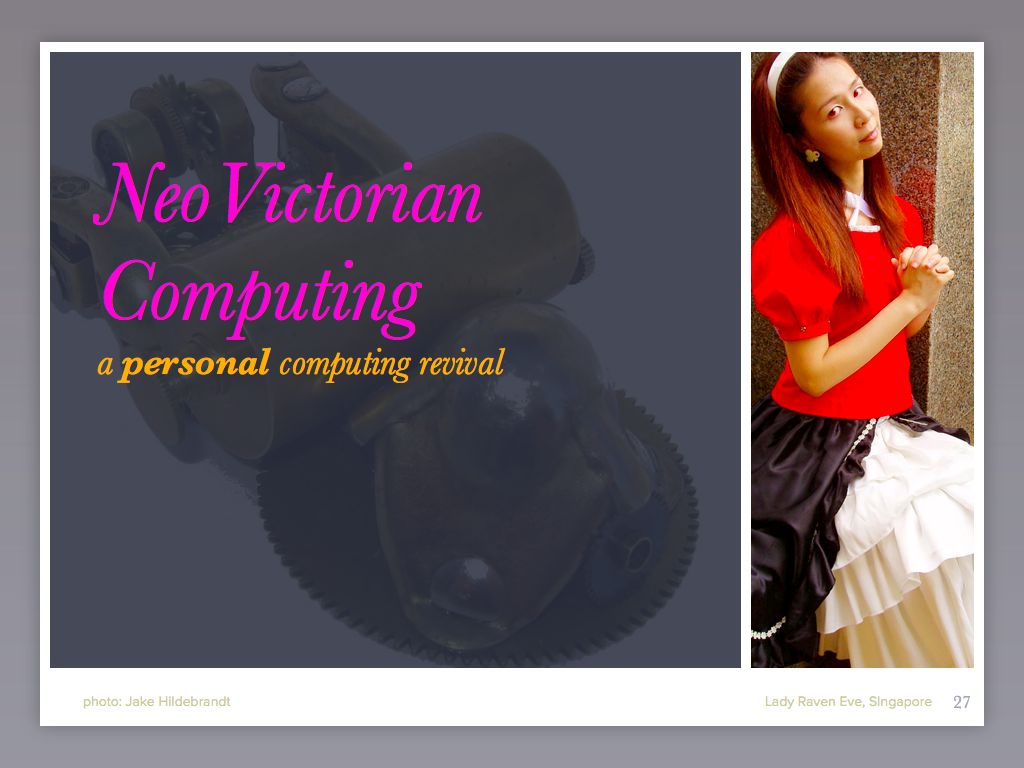

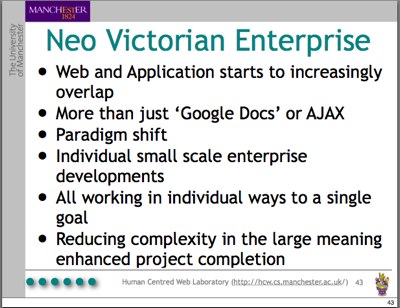

I want this to change. I want a software world where we might again enjoy new software that does things we couldn't do before. I want software that fits specific needs. I'm a software professional; why should I be using the same tools as a sixth grader, or a professional photographer, or an interior decorator?

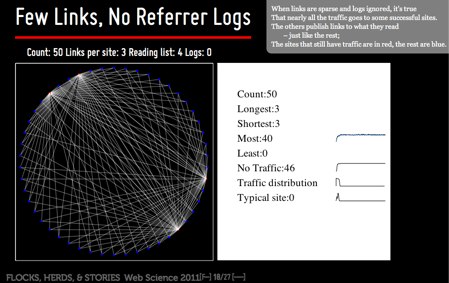

Why do we have so little variety in our software? One reason is that we ask it to do what it cannot, and we expect to do too little ourselves.

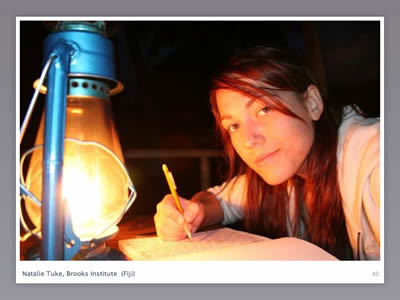

We should expect to learn. Sophisticated tools require study and effort, and they repay that effort by letting us do things we could not do otherwise. Calculus is a lot of work, but you can't understand physics or the stock market until you understand derivatives. Learning to draw the figure is a lot of work; once you do the work, you can draw.

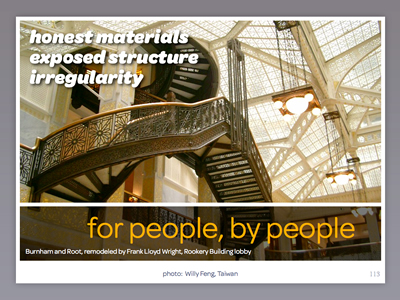

Users and software designers should embrace personality and style. Software made by committee must adhere to the committee's standards, but software made by people and made for people may be infused with the creator's personal style just as it is adapted for the user's personal needs.

We should accept failure. Software fails in many ways. We have tried to change this, we have made great progress, but it is the nature of software to fail.

We once thought that there should be no errors, that errors were a sin. Errors are natural. I suspect we routinely spend $100 in development to catch errors that would cost our users $10. I know we routinely spend thousands of dollars to forestall cosmetic errors that will cost our users nothing save transient aesthetic annoyance. Operating systems are not the appropriate standard; since everyone uses the operating system all the time, and since operating system failure probably collapses the user's entire house of cards, it makes sense to over-engineer operating systems.

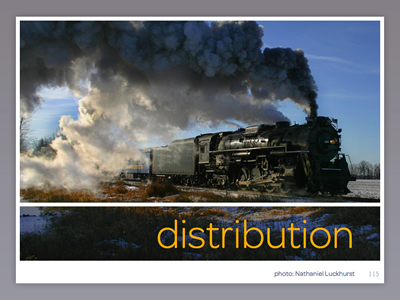

In the 19th century, British railroads went bankrupt building the rail system they wanted, with level grades and safe crossings and solid infrastructure. American railroads built cheap and fast, cutting corners with abandon. They accepted that they'd need to rebuild the worst parts in ten or twenty years, while the British rails would still be in fine condition a century later. The American answer was the right answer: yes, some American routes were built and rebuilt, and American rails suffered accidents and breakage, but some of those super-engineered British routes are now little commuter spurs. Some are abandoned.

photo: Nathaniel Luckhurst

It's hard to know what is a defect, and what is merely a surprise. The cult of usability has enshrined the belief that anything a novice doesn't expect is a defect. If we're just interested in how many copies we can sell to novices, usability matters. If we're interested in utility

To follow knowledge like a sinking star,

Beyond the utmost bound of human thought.

then novice usability is a smaller component. I don't care whether the perspective of the application icons is consistent: I care whether they give me the information I need and offer the affordances to help me learn more and do more.

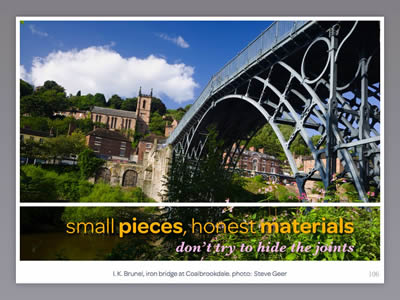

In the 19th century, Arts and Crafts sought an alternative to the seamless simulacra of mass production. Instead of using uniform dishes from The State Dish Factory, couldn't your dishes be made for you, and mine for me? There is no free lunch: if we want dishes made just for us, we accept that they'll cost more. And since the point is that the dishes are made for you by people, not by the State Dish Factory, we accept (and enjoy) things that come along with human involvement: they won't all be identical, we might sometimes see fingerprints, and sometimes we might detect the trace of the particular maker and their particular situation on a particular day.

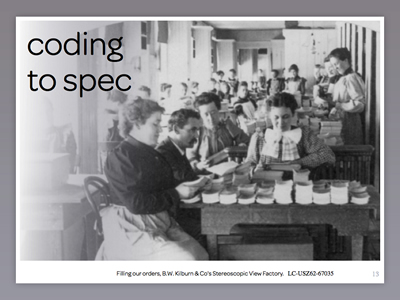

It didn't quite work with Arts and Crafts, though plenty of artisan work continues today. The cost difference was too large. Worse, distribution was impossible with manual office procedures: it was barely possible to fill individual orders for identical commodities, and handling unique cases would have required armies of order clerks.

Filling our orders, B.W. Kilburn & Co's Stereoscopic View Factory. LC-USZ62-67035

But software is the stuff of thought. We customize it all the time, through preferences and scripts and macros and plug-ins. We can, and should, embrace the workshop, and learn to treat our software as an artifact and also as material we shape to fit our needs.

It's made by people; it will have brushstrokes and thumbprints. It is what it is: it expresses its nature instead of hiding behind brushed metal shams. It is made for us, and if the maker did not always anticipate everything, we respect the effort and the intention, and we take some responsibility for picking up the pieces.